The Slow Death of Understanding

More output, less clue.

Where The Magic Stops

The promise of AI-assisted coding was simple: faster development, fewer tedious tasks, and code that writes itself. And in many ways, that promise has been kept, just not how we - or at least I - expected.

I’ve been going deep on “agentic coding” over the holidays. I’ve mostly been sticking with OpenCode, with Oh My OpenCode for a full “agentic workflow,” agent orchestration and all. These tools, and especially Opus 4.5, are really good now. I’ve been blown away by how quickly I’ve been able to churn out code in my hobby projects.

But the deeper I go, the more cracks I see. The shine is fading, and what’s underneath looks more like a fragile illusion than a future-proof revolution.

NOTE

If you’re not sure what “agent orchestration” or “agentic workflow” really means, check out this article by Steve Yegge.

AI’s Greatest Hits

If you’ve ever used Claude Code, Cursor, or OpenCode, you’ve probably seen the magic. Need to write tests? Done. Want a docstring for a function? Instantly there. Boilerplate code? Finished yesterday. Need a quick prototype? An agent can knock it out like it’s nothing. AI excels at the tedious, repetitive, and boring parts of development. It’s your eager intern, typing away.

And these tools really do speed things up, but only when the stakes are low.

Quantity Over Quality

The flip side of faster typing is that AI writes a lot of code, and that code is often wrong. More code means more opportunity for bugs. We are shipping buggier code faster than ever. AI-generated pull requests are now averaging nearly 11 issues per submission, turning senior developers into full-time janitors.1

Sure, this is obvious to most, but it’s an important caveat. Now we have other AI tools to review all the AI-generated code, and I don’t see that ending well. The sheer quantity is a ticking time bomb. Technical debt at AI scale is a massive trap we’re walking into. Not to mention the glaring environmental cost of all this unnecessary generation, which we’re conveniently sweeping under the rug.

The Agentic Content Mill

There’s another problem that has nothing to do with prompts or models. It’s the culture around this stuff.

Agentic coding is turning into content. Twitter clips, YouTube speedruns, “Watch me ship a whole app in 20 minutes.” Social media rewards bulk. Huge diffs look like progress while quiet maintenance looks like failure. That incentive is poison. It pushes people toward maximum output and minimum ownership.

The data confirms the sentiment. GitClear’s 2025 report found that 2024 was the first year they measured where “Copy/Pasted” lines exceeded “Moved” lines. “Moved” lines are a rough proxy for refactoring. Less moving and more copying is not a good direction.2

Thoughtworks has also called out “complacency with AI-generated code” as a real risk as these assistants get normalized.3 So yeah, the tools are impressive, but the surrounding pressure to keep generating is worse. It normalizes treating code like disposable sludge, and someone still has to live in that repo later.

Fighting the Model

Even with good prompts, clear instructions, and multiple agents to cut down on context window usage, the AI sometimes just doesn’t do what you want. You fight it. It forgets things. It misses edge cases. Sometimes it “fixes” a bug by deleting the test. I’ve often found myself thinking, “I could’ve written this myself by now.”

LLMs have become very smart, but they still hinge on keyword-sensitive prompting (looking at you, Gemini). Tell it “add a user profile endpoint” and you get a demo. Tell it “add a secure, tenant-scoped profile endpoint that returns only non-sensitive fields” and you get something closer to reality, but now you’re writing machine-babble instead of shipping.
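To make the gap between those two prompts concrete, here is a minimal sketch in TypeScript. Everything in it is hypothetical: the `UserRecord` shape, the in-memory `users` array, and both function names are made up for illustration, and no real framework or model output is being quoted. The first function is the kind of thing a "demo" answer might look like; the second is roughly what the longer prompt is asking for.

```typescript
// Hypothetical types and data, for illustration only.
interface UserRecord {
  id: string;
  tenantId: string;
  displayName: string;
  email: string;
  passwordHash: string;
}

interface PublicProfile {
  id: string;
  displayName: string;
}

const users: UserRecord[] = [
  { id: "u1", tenantId: "t1", displayName: "Ada", email: "ada@example.com", passwordHash: "x" },
  { id: "u2", tenantId: "t2", displayName: "Bob", email: "bob@example.com", passwordHash: "y" },
];

// The "demo" version: no tenant check, and the whole record
// (including passwordHash) is returned to the caller.
function getProfileNaive(userId: string): UserRecord | undefined {
  return users.find((u) => u.id === userId);
}

// The "tenant-scoped, non-sensitive fields only" version:
// the lookup is restricted to the caller's tenant, and the
// response is built from an explicit allow-list of fields.
function getProfileScoped(
  callerTenantId: string,
  userId: string,
): PublicProfile | undefined {
  const user = users.find(
    (u) => u.id === userId && u.tenantId === callerTenantId,
  );
  // A missing user and a user in another tenant look identical to the caller.
  if (!user) return undefined;
  return { id: user.id, displayName: user.displayName };
}
```

The second function is only a few lines longer, but every one of those lines encodes a decision somebody had to know to ask for.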

Try running AI-assisted code review (Cursor, Copilot Review, CodeRabbit) on the same PR twice. You’ll get different results. Sometimes helpful, sometimes noise. The inconsistency makes it hard to trust. In practice, many teams use AI review as a first pass, but humans still do the real work.

Prompt, Pray, Repeat

“Vibe coding” is real. Build a prototype and AI feels like magic. Try to ship and maintain a product and the agentic dream collapses. These tools don’t handle long-term complexity, and their “fixes” introduce regressions.

As one dev put it: “Agentic coding falls flat on its face the moment you try to do real work.”4

Zig, and the Cost of Being Explicit

Zig is almost a counterexample to this whole era. Its docs brag about “no hidden control flow,” “no hidden memory allocations,” and no macros. That mindset is the opposite of agent-driven code floods. Zig forces you to stare at what your program is doing, with no illusions.

That is exactly why agentic tools fall flat in systems languages. In web dev, you can brute-force your way to “it works.” In Zig, the smallest “close enough” decision turns into a leak, a crash, or a performance cliff. The model can generate Zig that looks plausible, but plausibility is not the bar, and we should never let it become the bar.

Rust is the same story, just with different pain. Ownership and borrowing are great engineering, but brutal for “generate until green.” Agents draft Rust that compiles only after you fight the borrow checker, because the model does not feel the constraint - you do. And once it compiles, the next agent edit often breaks the careful little balance you just established. Not to mention the numerous logic errors it (probably) introduced!

The Perfect Target

Web dev is getting hit the hardest by agentic tools for the same reason it’s the easiest place to fake progress. The ecosystem is huge, the abstractions are deep, and the demand for constant output never stops. GitHub has called out TypeScript taking the top spot by contributor count, with AI showing up as part of the story.5 AI is decent at first drafts in React, but it gets shaky fast when you cross into real integration work where context, routing, state, and design constraints collide.6 That is where the code flood becomes a maintenance bill.

The Overreliance Era

These tools got so good that people pivoted to full reliance. That’s a mistake. Mid-2025 research from METR found open-source devs were 19% slower to complete issues with AI assistance, and productivity dropped up to 40% in some workflows.7

This reflects a broader trend. AI pilots outside software have struggled to produce real ROI. Even AI-positive coverage notes teams report at least one significant downside when adopting these tools.8 The hype is loud. The results are shaky.

Adoption Without Disruption

Executives keep talking like this stuff is already changing everything. The reality is a lot smaller and a lot sadder. MIT research summarized by Axios found that 95% of organizations studied got zero return on generative-AI investment, even after tens of billions poured into pilots.9 Reuters Breakingviews put it bluntly: “adoption is high, but disruption is low,” and for mission-critical work, most companies still prefer humans.10

Measured outcomes keep shrinking the story. TechPolicy.Press points to a study of 25,000 Danish workers where time savings from LLMs were only 2.8%, and it notes that AI tools also created new work for 8.4% of workers.11 So the pitch is “efficiency,” but the experience is overhead.

And then there is the coping narrative: layoffs. Axios notes AI is not yet a proven head-count reducer, and quotes a researcher calling it a “very convenient explanation for layoffs.”12 Even the loudest AI-first case studies are walking it back. Bloomberg reported that Klarna’s CEO said the AI-driven cost-cutting in customer service went too far, and the company planned hiring so customers can still talk to a real person.13

The Spiral You Can’t Ignore

The agentic hype loop doesn’t stop at code.

More generated code means more CI, more tests, more dependencies, more tokens, and more “agents to review the agents.”

Anthropic has pointed out a related scaling problem from the agent-builder side: tool definitions and tool results can balloon the context before the agent even starts doing the work.14 That’s the hidden cost of “just add another agent.”

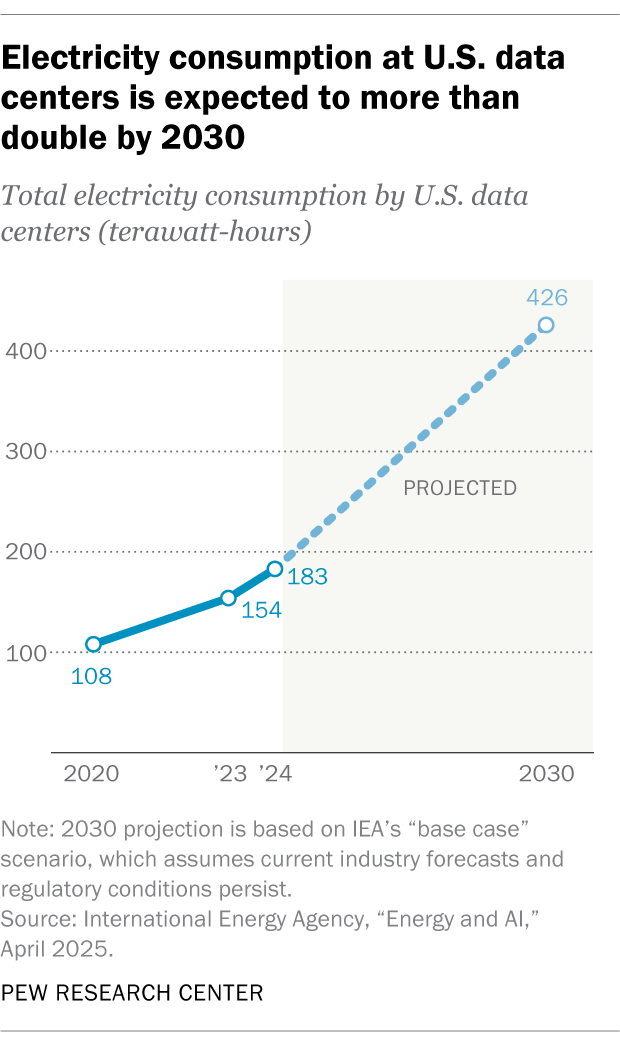
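A rough way to see how that overhead adds up is to estimate the size of the tool definitions alone. The sketch below is in TypeScript and rests on loud assumptions: the `ToolDefinition` shape is hypothetical (real agent frameworks differ), and the 4-characters-per-token ratio is a common rule of thumb, not a real tokenizer. Treat the numbers it produces as order-of-magnitude only.

```typescript
// Hypothetical tool definition shape; not any specific framework's schema.
interface ToolDefinition {
  name: string;
  description: string;
  parameters: Record<string, string>;
}

// Assumption: roughly 4 characters per token. This is a heuristic,
// not a tokenizer, so results are estimates only.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Sum the estimated tokens of every tool definition as it would be
// serialized into the context before the agent does any work.
function toolOverheadTokens(tools: ToolDefinition[]): number {
  return tools.reduce(
    (sum, tool) => sum + estimateTokens(JSON.stringify(tool)),
    0,
  );
}

// Build a made-up tool so the growth can be observed.
function makeTool(index: number): ToolDefinition {
  return {
    name: `tool_${index}`,
    description: "Hypothetical tool used only to illustrate context growth.",
    parameters: { path: "string", query: "string" },
  };
}

const fewTools = Array.from({ length: 5 }, (_, i) => makeTool(i));
const manyTools = Array.from({ length: 50 }, (_, i) => makeTool(i));

console.log("5 tools:", toolOverheadTokens(fewTools), "estimated tokens");
console.log("50 tools:", toolOverheadTokens(manyTools), "estimated tokens");
```

The estimate grows linearly with the number of tools, and it is paid on every request, before a single tool result has been added to the context.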

And on the physical side, the compute buildout is not subtle anymore. The IEA projects global data center electricity consumption more than doubling to about 945 TWh by 2030, with AI as the biggest driver.15 Pew reports U.S. data centers were about 4% of U.S. electricity use in 2024, with demand expected to more than double by 2030.16 Four percent sounds small until you put it into perspective: that’s roughly equivalent to the annual electricity demand of the entire nation of Pakistan.

When we normalize pumping out code, we’re also normalizing the infrastructure behind it: more datacenters, more power draw, more water for cooling, more extraction upstream. The “more more more” culture surrounding this tech feels like a deal where everyone pays and no one really wins.

But What About The Shareholders?

The macroeconomic outlook isn’t exactly helping, either. AI is driving up compute demand, but where’s the sustainable business model? Outside of a few companies renting out these LLMs, we’ve yet to see consistent, long-term value in most sectors. What happens when the costs catch up?

I truly don’t think we are ready for what might happen.

A Word of Warning

AI is a tool, not a shortcut. It can help you learn, but it can’t replace learning. It can automate boilerplate, but it can’t architect systems (not well, anyway). It can find patterns, but it doesn’t truly understand what it’s doing.

If you let the AI think for you, you won’t be able to fix what it breaks. And it will break things.

The Hangover

We spent the last two years treating AI as a shortcut, a way to bypass the hard work of thinking. But in systems engineering, there are no shortcuts, only deferred costs.

Use these tools to handle your boilerplate and your docs. Let them write your unit tests (read them). But do not let them design your systems. If you surrender your critical thinking to a probability engine, you’re becoming a passenger in your own codebase. And as the hype fades and the electricity bill comes due, we’re realizing that the only way out of this mess is the same way we got here: writing the code, one deliberate line at a time.

Footnotes

- Thoughtworks Technology Radar: Complacency with AI-generated code

- America’s top companies keep talking about AI — but can’t explain the upsides

- Octoverse: A new developer joins GitHub every second as AI leads TypeScript to #1

- Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity

- AI tools are overdelivering: results from our large-scale AI productivity survey

- Don’t Be Fooled By Trump’s Plan To ‘Upskill’ Workers To Prepare For AI

- Klarna Turns From AI to Real Person Customer Service - Bloomberg

- Pew Research Center: What we know about energy use at U.S. data centers amid the AI boom